Will my favorite Android soon dream of sheep?

People now use AI as a buzzword to promote the Roomba vacuum cleaner: that’s a great sign! To be fair, the Roomba does fit one definition of AI: a system that perceives its environment and can make intelligent decisions, the kind of decisions a reasonable human would make. Now imagine if every camera phone could “sense the environment” and make “intelligent decisions” that anticipate our needs and act on them meaningfully. AI then becomes a way to help and enhance the lives of truly intelligent beings: all of us!

A system qualifies as AI when it perceives its environment and takes actions that maximize its chances of success. For example, the next generation of sensor-enhanced mobile devices may have enough smarts to qualify as AI-based systems. At least, that is what we are working very hard on at Fullpower.

AI is not just about systems that can learn. I think that what matters more for AI is understanding the environment and making inferences that maximize the chances of success. Learning can be part of the process, but it is neither necessary nor sufficient. By the same token, natural language processing is not automatically AI. It can be: we can use AI techniques as part of a system that does natural language processing. But language is not automatically intelligence. It is communication.

For years, the Turing Test was seen as the ultimate criterion: if a human exchanging text messages with a machine couldn’t tell that he or she was talking to a machine, then that machine had to be “artificially intelligent”. In my opinion, the Turing Test is simply about building a machine good enough to fool a human, through text messages alone, into believing that it is human. It is always an interesting exercise, of course, but at the end of the day it does not attempt to truly emulate the advanced problem-solving abilities of human intelligence, let alone any form of “social intelligence” or understanding of the environment through sensors, for example. Conversely, we can think of many humans who might fail the test themselves yet clearly have “natural intelligence”. So the Turing Test may be an interesting exercise, but it is not a way to characterize either machine or human intelligence.

As is often the case, I think academia may have gotten a bit stuck on the LISP machine industry and on robots. The idea was to replace human intelligence and/or labor. However, things are changing quickly and technology is moving by leaps and bounds. For example, while we all thought robotics would make American and European manufacturing more competitive, China became the “factory of the world” without that technology, by leveraging an endless supply of low-wage, hard-working, low-skilled labor. Present-day industrial robots are made of just a little bit of AI and a lot of electronics and mechanics. I’d take R2D2 any day! The world of sensor-enabled and sensor-enhanced devices with integrated inference engines holds the greatest practical promise for AI’s long-term success. Next-generation robots will get better too!

Yes, I’d predict that most of the successful and useful advances will come from sensor-enabled devices and from networks of such devices. Both will be important, and both will drive significant advances.

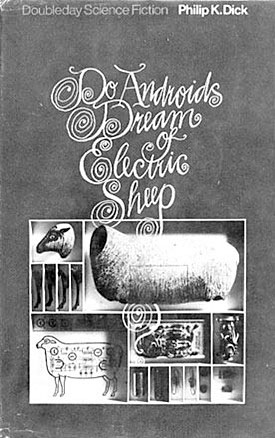

Yet, as Shakespeare eloquently says: “We are such stuff as dreams are made on.” Our robots and machines don’t dream yet. Or, as Philip K. Dick’s masterpiece “Do Androids Dream of Electric Sheep?” asks: is the true test “emotional intelligence”?